AMD Advancing AI 2025: ROCm 7 - Leveraging AI Innovation

AMD is investing heavily in an open software ecosystem, and announced ROCm version 7 at the Advancing AI 2025 event.

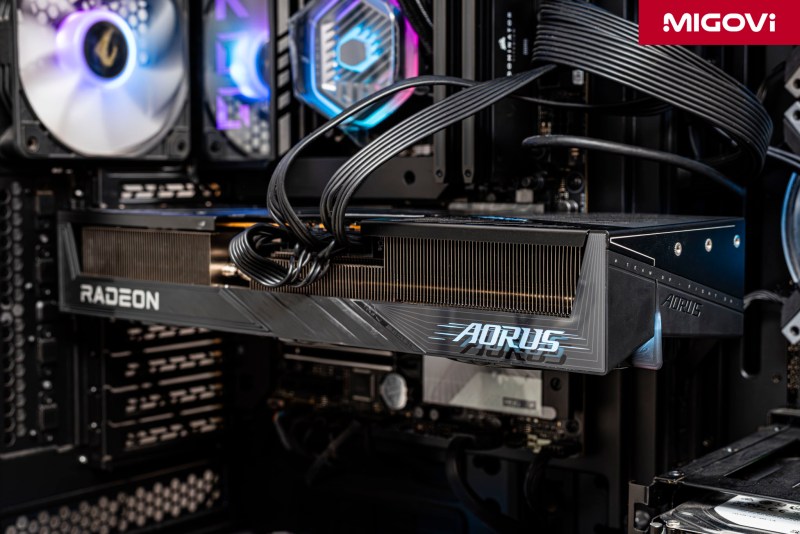

High-performance hardware is only half the story of AI success; the other half is the supporting software. The software platform plays a key role in extracting the full potential of the hardware while giving developers a friendly and efficient environment to work in. The Radeon Open Compute platform, or ROCm, shows that AMD is committed to both hardware and software, with a focus on building an open ecosystem.


Performance Improvements on AMD ROCm 7

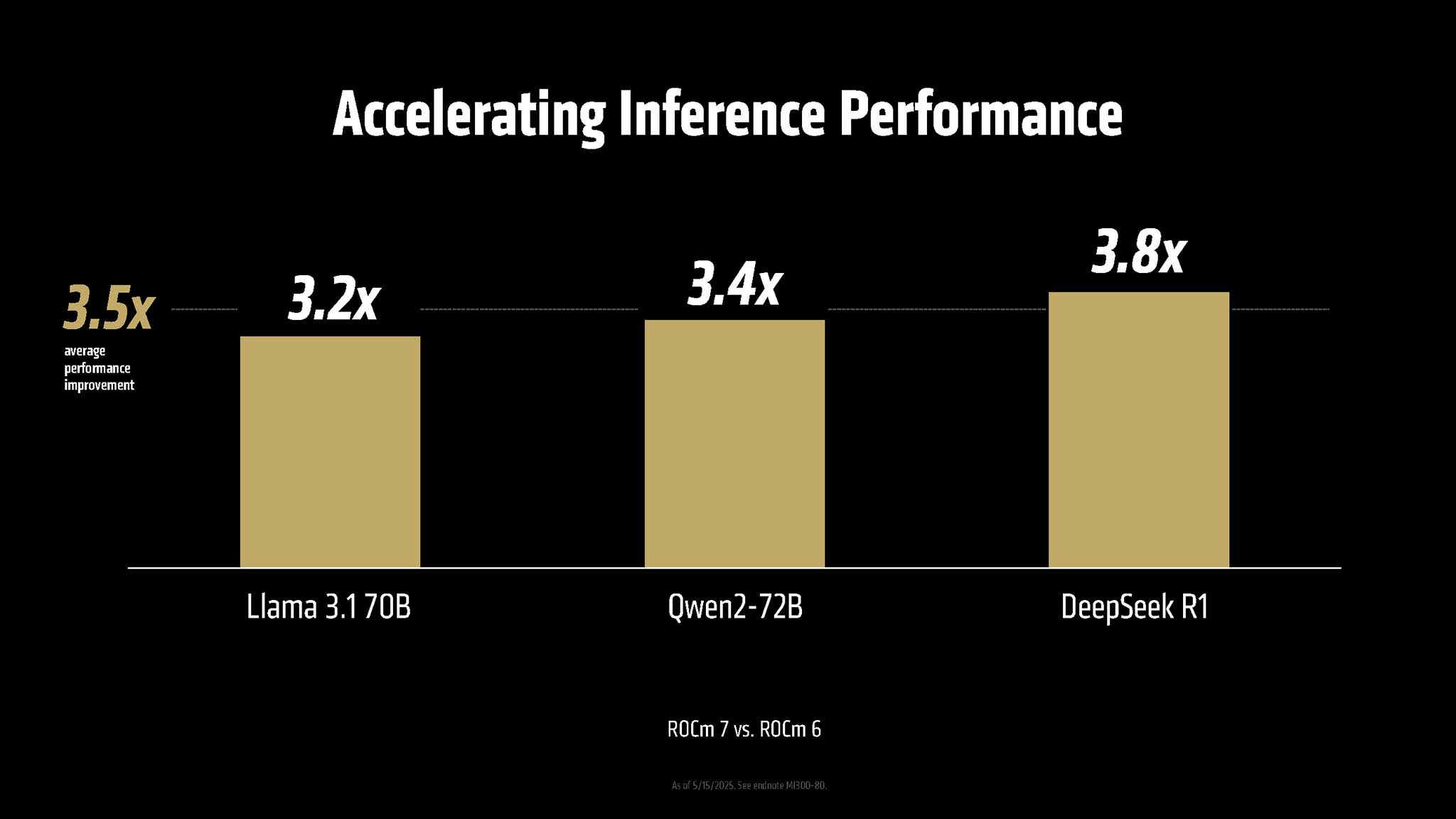

AMD introduced ROCm 7 with impressive performance claims. Compared to ROCm 6, ROCm 7 is said to deliver 3.5x better inference performance and 3x better training performance. AMD's internal tests show significant improvements on popular AI models. For example, inference performance increased by 3.2x with the Llama 3.1 70B model, by 3.4x with Qwen2-72B, and by 3.8x with DeepSeek R1, all compared to ROCm 6.
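As a quick sanity check on the figures above, the headline 3.5x inference number is consistent with an average of the three per-model speedups. The sketch below assumes the headline is an average over exactly these three models, which the article does not state; the variable names are illustrative.

```python
from statistics import geometric_mean, mean

# Per-model inference speedups (ROCm 7 vs. ROCm 6) as quoted in the text.
speedups = {
    "Llama 3.1 70B": 3.2,
    "Qwen2-72B": 3.4,
    "DeepSeek R1": 3.8,
}

values = list(speedups.values())
arithmetic = mean(values)            # simple average of the three ratios
geometric = geometric_mean(values)   # often preferred when averaging ratios

print(f"arithmetic mean: {arithmetic:.2f}x")
print(f"geometric mean:  {geometric:.2f}x")
```

Both averages round to 3.5x at one decimal place, so the headline figure matches the per-model numbers under either convention.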

AMD notes that these performance leaps come from a combination of factors, including improved platform usability, low-level performance optimizations, and better support for low-precision data types like FP4 and FP6. ROCm 7 also features improvements to communication stacks that optimize GPU utilization and data movement between system components.

It should be noted, however, that in the comparison with “ROCm 6”, AMD may be comparing against the version of ROCm 6 available when each model was first supported, not necessarily the latest stable version, ROCm 6.4.1. Even so, there is no denying that the performance improvements ROCm has achieved recently are significant and encouraging.

ROCm 7 Features and Updates

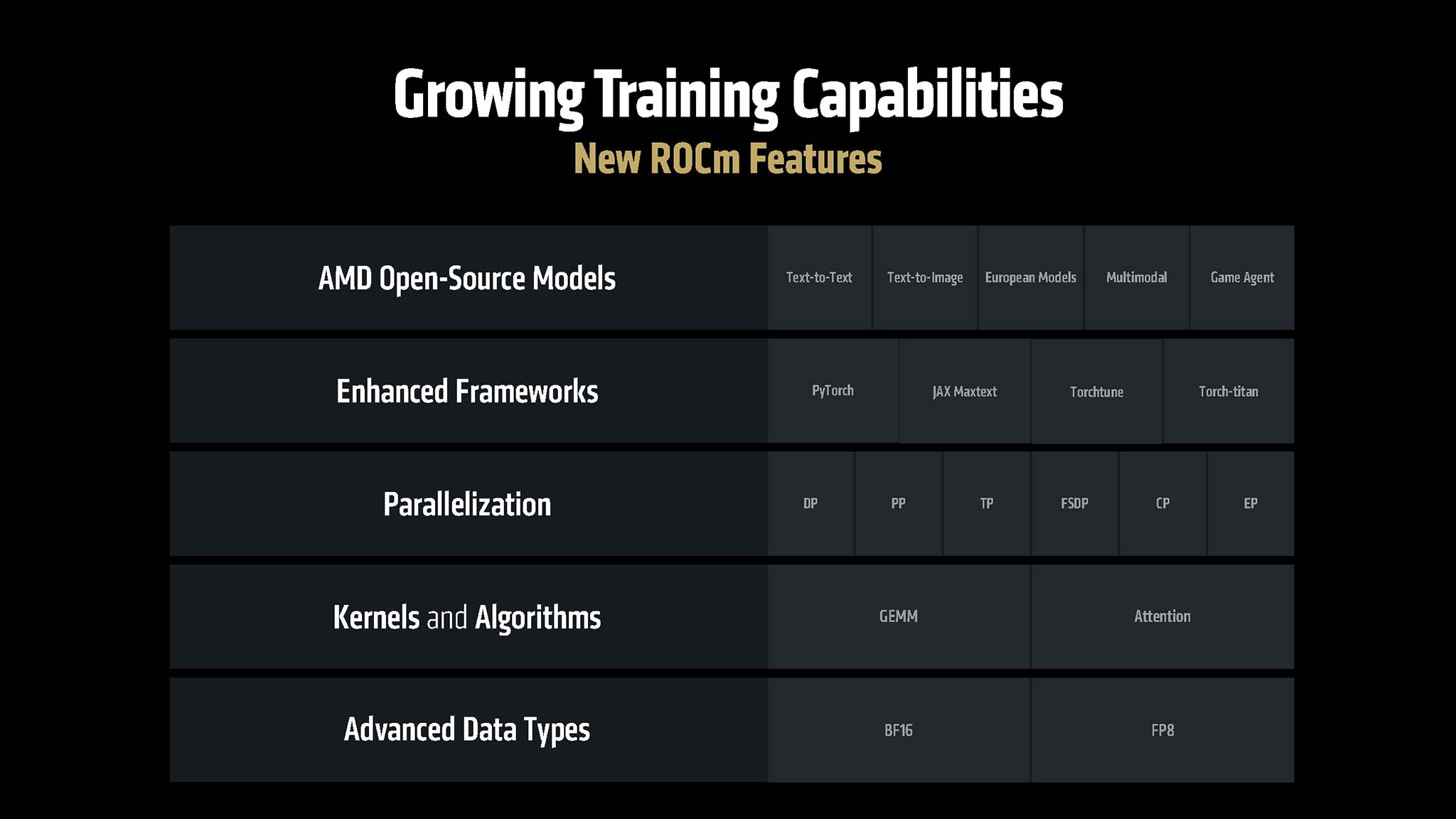

ROCm 7 focuses not only on performance but also brings a number of important features and updates. First, ROCm 7 adds support for new data types, with enhanced support for the FP8, FP6, and FP4 formats and for mixed-precision computing. These are extremely important for optimizing performance and reducing memory requirements in modern AI inference tasks.
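To make the memory argument concrete, here is a rough back-of-the-envelope sketch of how much storage model weights alone need at each precision. It assumes a hypothetical 70-billion-parameter model and ignores per-block scaling factors, the KV cache, and activations, so real footprints will be somewhat higher; FP16 is included only as a common baseline.

```python
# Bits per weight for each format; FP16 is added here as a baseline for comparison.
BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


params = 70e9  # hypothetical 70B-parameter model (assumption for illustration)
for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, going from FP16 to FP4 cuts weight storage to a quarter, which is why low-precision formats matter so much for fitting large models onto fewer GPUs.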

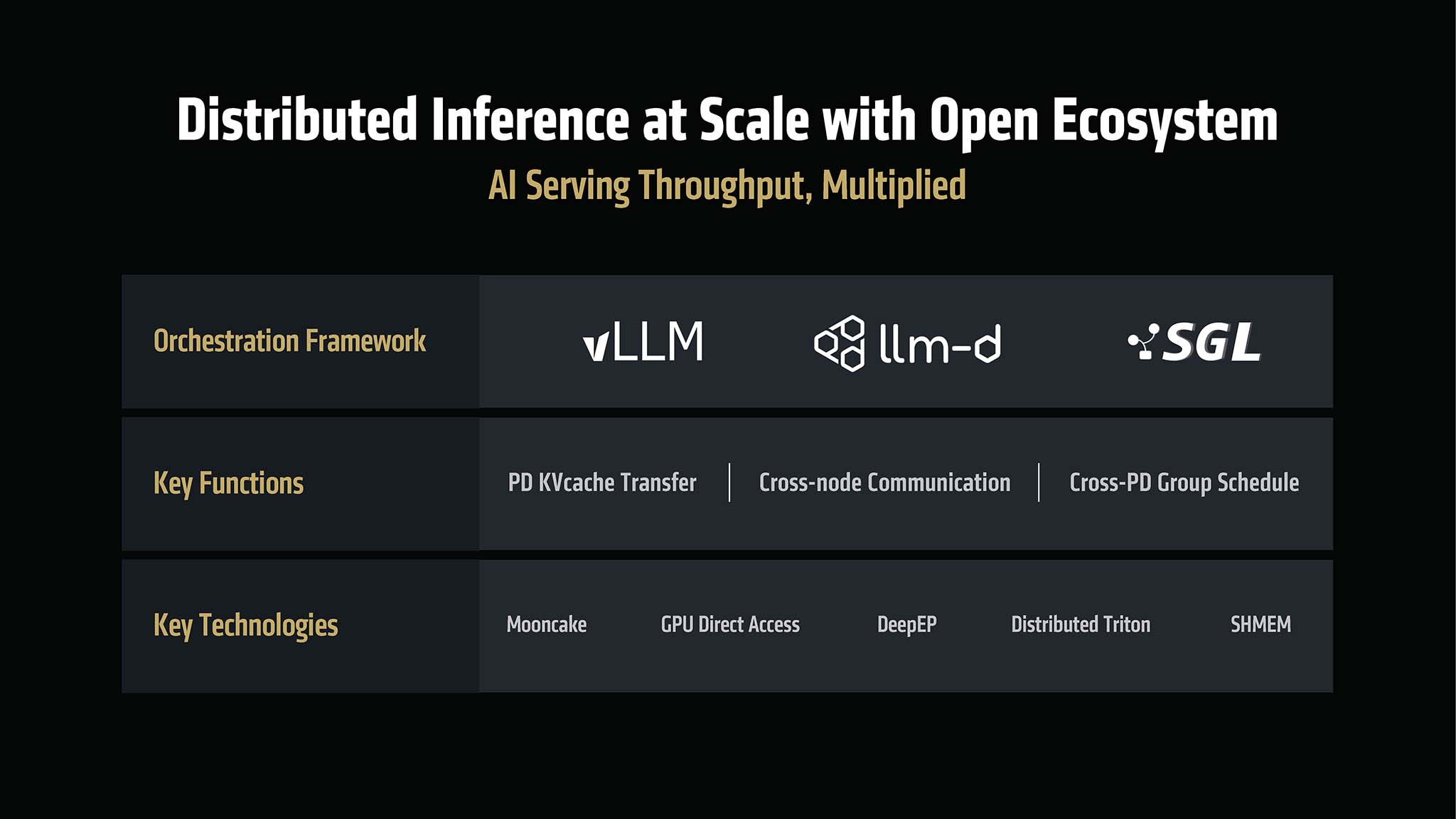

One of the highlights of ROCm 7 is its robust and open approach to distributed inference. AMD has worked closely with the open source community and popular frameworks such as SGLang, vLLM, and llm-d to co-develop common interfaces and primitives. This allows inference applications to scale efficiently across multiple AMD GPUs.

ROCm 7 adds new computational kernels and optimized algorithms, including GEMM (General Matrix Multiply) auto-tuning, support for Mixture of Experts (MoE) models, advanced attention mechanisms, and a system that allows developers to create custom kernels in Python.

The developer experience is also improved with ROCm 7. The platform is designed to meet the growing needs of Generative AI and HPC applications, while transforming the developer experience by increasing accessibility, improving productivity, and fostering community collaboration. ROCm 7 offers better support for industry-standard AI frameworks such as PyTorch and TensorFlow. In addition, ROCm 7 provides new development tools, drivers, application programming interfaces (APIs), and libraries to accelerate the development and deployment of AI solutions.
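For readers who want to check whether their PyTorch installation targets ROCm, the following minimal sketch may help. It relies on `torch.version.hip`, which is a version string on ROCm builds and `None` otherwise, and on the fact that ROCm builds expose AMD GPUs through the `torch.cuda` namespace. The function name `describe_pytorch_backend` is made up for this example.

```python
def describe_pytorch_backend() -> str:
    """Report whether the installed PyTorch build targets ROCm (HIP)."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version is None:
        return "PyTorch build without ROCm support"

    # ROCm builds reuse the torch.cuda namespace for AMD GPUs.
    if torch.cuda.is_available():
        return f"ROCm {hip_version} build, GPU: {torch.cuda.get_device_name(0)}"
    return f"ROCm {hip_version} build, no GPU visible"


print(describe_pytorch_backend())
```

Because the same `torch.cuda` calls work on both vendors' builds, most existing PyTorch code needs no source changes to run on a ROCm build.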

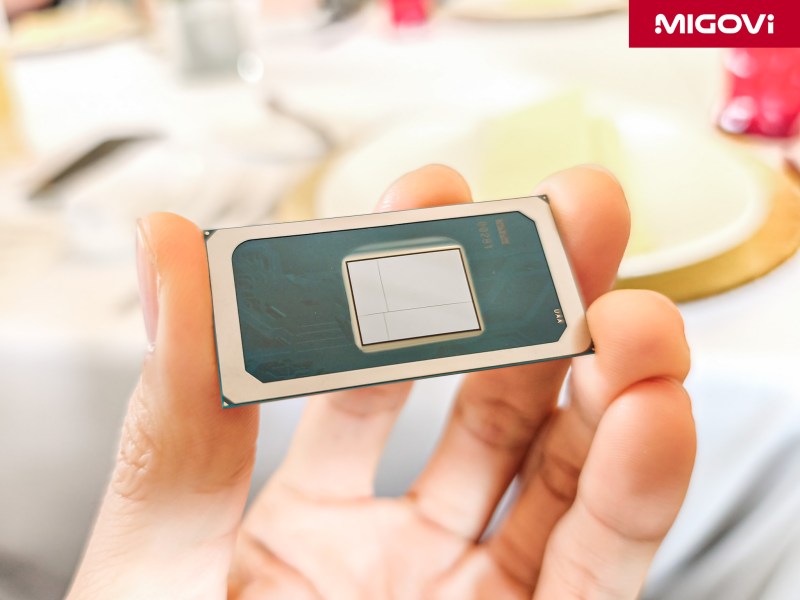

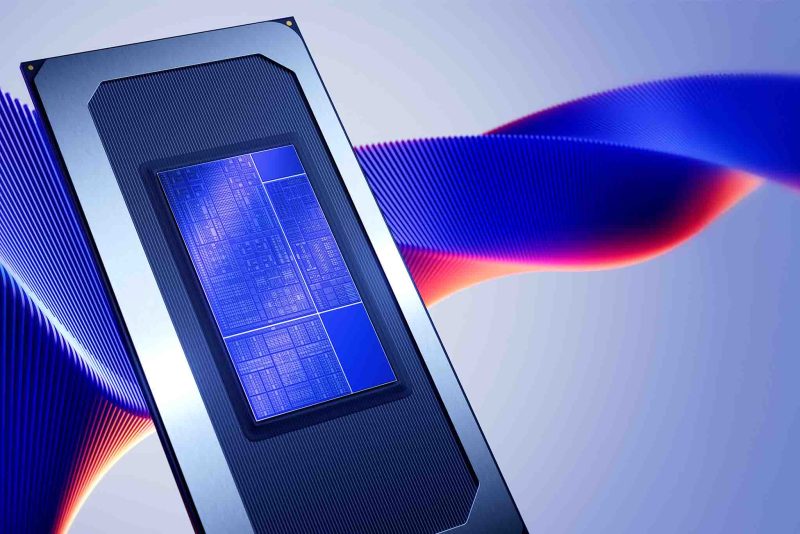

Expanded hardware and operating system support

One of the most important announcements on the Advancing AI 2025 stage related to ROCm 7 was the significant expansion of hardware compatibility and operating system support. AMD is realizing its vision of “ROCm everywhere, for everyone” by expanding ROCm support beyond the data center. Developers will soon be able to build and run AI applications on PCs with Radeon GPUs and laptops with Ryzen AI APUs. This creates a consistent development environment from the client to the cloud, expected to be widely available in the second half of 2025.

Another notable highlight is that ROCm 7 will make Windows a fully and officially supported operating system. This is a strategic move: it opens ROCm to the large number of developers and users who currently work primarily in the Windows environment, and it helps ensure source code portability and efficiency across both personal and business setups.

ROCm is clearly AMD's strategic weapon in the battle for AI market share, acting as a direct counterweight to the NVIDIA CUDA platform. For many years, the maturity and popularity of CUDA have been a major barrier for competitors. With ROCm 7, AMD is making a serious effort to close this gap.

The “ROCm everywhere for everyone” strategy, together with tools like the HIPIFY utility (which makes it relatively easy to convert source code from CUDA to ROCm), is an important step in encouraging developers to explore and migrate to AMD’s platform. Software has long been considered one of AMD’s biggest weaknesses in the AI market, but with the strong improvements in ROCm 7, the situation is changing for the better.
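To give a feel for what a CUDA-to-HIP conversion involves, here is a toy sketch of the renaming idea. It is not the HIPIFY tool itself: the mapping table contains only a handful of real CUDA/HIP name pairs, and the `toy_hipify` function and sample snippet are made up for this example. The actual HIPIFY tools handle far more APIs and edge cases.

```python
import re

# A few real CUDA-to-HIP name pairs; the actual HIPIFY tools cover far more.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names first so cudaMemcpyHostToDevice is not matched as cudaMemcpy.
_PATTERN = re.compile(
    "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
)


def toy_hipify(source: str) -> str:
    """Rename known CUDA identifiers to their HIP equivalents (illustrative only)."""
    return _PATTERN.sub(lambda match: CUDA_TO_HIP[match.group(0)], source)


cuda_snippet = """#include <cuda_runtime.h>
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaFree(d_x);
"""
print(toy_hipify(cuda_snippet))
```

Because HIP mirrors much of the CUDA runtime API name for name, a large share of a port is mechanical renaming of this kind, which is what makes automated conversion practical.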

AMD Developer Cloud

AMD Developer Cloud is here to support developers and accelerate adoption of the company’s AI platform. The service provides immediate access, with no initial hardware investment, to AMD Instinct MI300 (soon to be MI350 Series) GPUs, pre-configured development environments, and free Developer Credits. Docker containers pre-installed with popular AI software significantly reduce initial setup time.

AMD Developer Cloud is expected to lower barriers to entry and expand access to next-generation AI computing power for the global developer community and open source projects, especially individuals, startups, and research organizations with limited resources. It is not only a service that provides computing resources but also a strategic tool for AMD to collect early feedback from users, improve its products, and promote wider adoption of the ROCm platform and Instinct hardware.

The big improvements in ROCm 7, especially in performance and in expanding support to Windows and mainstream Radeon GPUs, are key to AMD’s long-term competitiveness with NVIDIA in AI. Powerful hardware is necessary, but it is a mature, easy-to-use, high-performance, and widely supported software ecosystem that keeps developers and users engaged. NVIDIA’s CUDA has a huge head start in this regard. However, if ROCm 7 really delivers the promised performance and experience, combined with the potential price/performance advantage of AMD hardware, the balance of power in the AI software market could begin to shift. Windows support would open up a huge market of users and developers that was previously largely out of ROCm’s reach.

The “ROCm everywhere for everyone” strategy and the active support of popular open source frameworks (such as vLLM, SGLang, and the collaboration with Hugging Face) show that AMD is not only trying to build everything itself, but is also working to form a “coalition” of developers, companies, and organizations that want a truly open and powerful alternative to the CUDA platform. This approach not only speeds up the development and maturity of ROCm, but also creates a more diverse and flexible ecosystem that attracts those who support the open source philosophy and want to avoid being locked into a single vendor.
