AMD Advancing AI 2025: Comprehensive AI Solutions and Product Roadmap

AMD does not only develop individual hardware and software components. It also aims to deliver complete AI solutions, from the chip level up to full rack-scale systems. Alongside these solutions, the company outlined a clear and ambitious product roadmap for the coming years.


Rack-Scale AI System “Helios”

At Advancing AI 2025, AMD previewed its next-generation rack-scale AI system, code-named “Helios,” a key part of AMD’s strategy to deliver integrated, high-performance AI solutions built on open standards.

“Helios” is built from three key components. AMD Instinct MI400 Series GPUs are a new GPU family expected to deliver up to 10x higher inference performance on Mixture of Experts (MoE) models than the previous generation. AMD EPYC “Venice” CPUs, based on the Zen 6 architecture, provide the processing power and bandwidth needed to support large GPU clusters. AMD Pensando “Vulcano” NICs, the successor to the Pollara network cards, support higher connection speeds, such as the 800 GbE cited for the 2027 rack-scale system.

“Helios” is designed as a unified system with a tightly connected scale-up domain of up to 72 MI400 Series GPUs. It provides up to 260 TB/s of scale-up bandwidth and supports Ultra Accelerator Link (UALink), an open interconnect standard developed by a consortium of technology companies to provide a fast, efficient interface for connecting accelerators in AI and HPC systems.
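As a rough illustration of what the 260 TB/s figure means per accelerator, the sketch below divides the aggregate scale-up bandwidth evenly across the 72 GPUs. The even split and the helper name `per_gpu_share` are assumptions for illustration only; AMD has not described how scale-up bandwidth is distributed inside the rack in these terms.

```python
# Helios figures quoted in this article.
GPUS_PER_RACK = 72
SCALE_UP_BANDWIDTH_TBPS = 260.0  # aggregate scale-up bandwidth, TB/s


def per_gpu_share(total: float, gpus: int) -> float:
    """Average per-GPU share of an aggregate figure (hypothetical helper, assumes an even split)."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return total / gpus


share = per_gpu_share(SCALE_UP_BANDWIDTH_TBPS, GPUS_PER_RACK)
print(f"~{share:.2f} TB/s of scale-up bandwidth per GPU")
```

Run as-is, this prints roughly 3.61 TB/s per GPU, an average on paper rather than a measured link rate.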

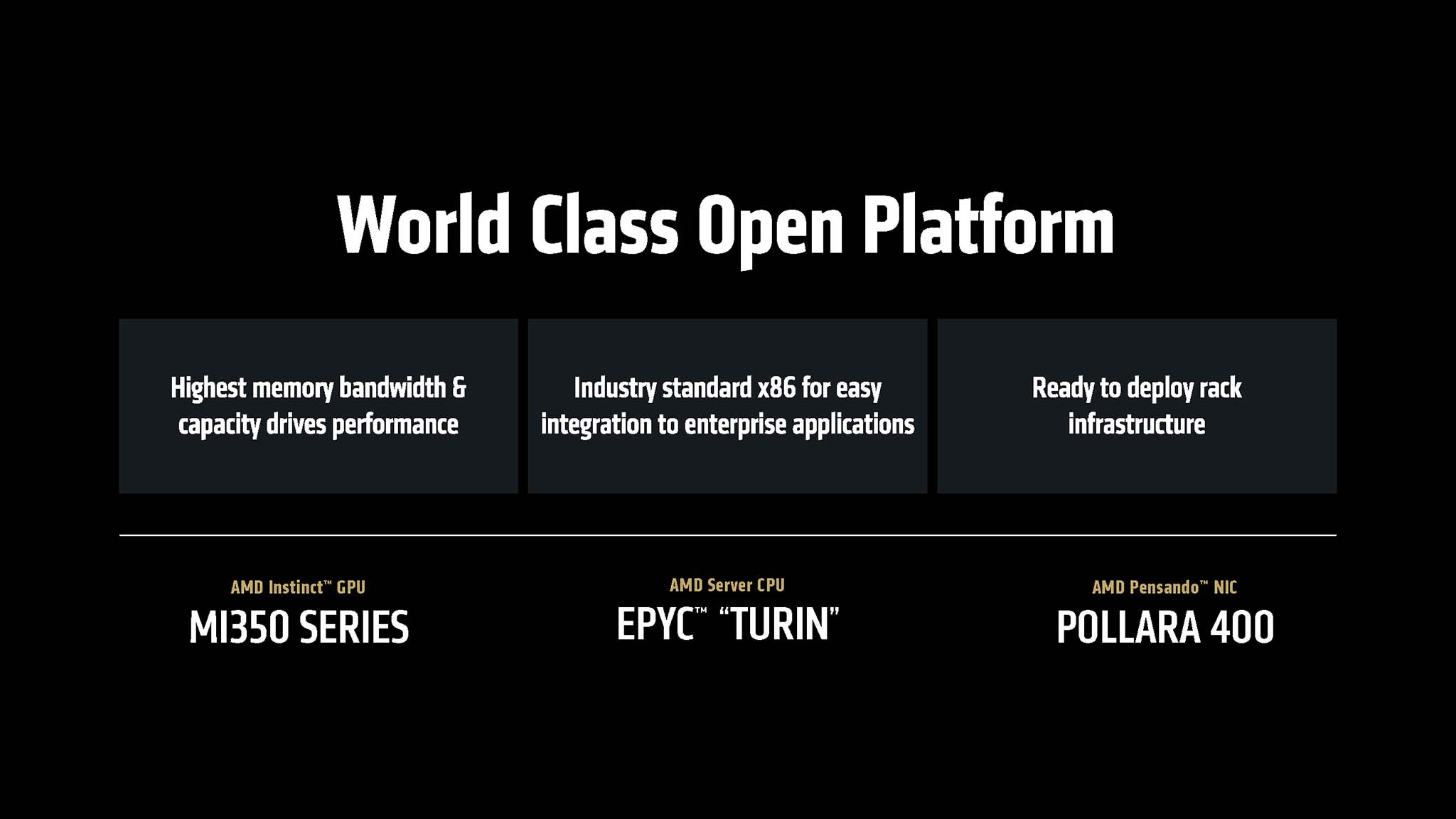

Ahead of Helios, AMD has already been deploying rack-scale systems based on Instinct MI350 Series GPUs, 5th Gen EPYC CPUs, and Pollara NICs. Hyperscalers such as Oracle Cloud Infrastructure (OCI) have begun deploying these systems, which are expected to become more widely available in the second half of 2025.

AMD Verano CPU and Instinct MI500X GPU

AMD has announced an AI product roadmap with an annual refresh cycle for its CPUs, GPUs, and rack-scale AI solutions. The 2027 plan includes the EPYC “Verano” CPU, the Instinct MI500X Series GPU, and a next-generation rack-scale AI system. Verano, the successor to EPYC “Venice,” is a new generation of server CPUs built on the Zen 7 architecture, promising further gains in performance and energy efficiency. The Instinct MI500X Series succeeds the MI400 Series and is expected to bring even higher AI compute performance. The rack-scale system that follows Helios will combine the Verano CPU, the MI500X GPU, and the Pensando “Vulcano” network card at 800 GbE speeds.

Rack-scale systems in 2027 are expected to include more “compute blades,” increasing performance density per rack. This suggests that AMD is aiming for systems with enough computing power to compete with the top systems on the market.

Production of the “Verano” CPU and MI500X GPU in 2027 could line up with TSMC’s planned rollout of its A16 (1.6 nm-class) process in late 2026. A16 is expected to incorporate backside power delivery, a technique that is particularly useful for high-power data center CPUs and GPUs.

In the nearer term, AMD’s roadmap places the Instinct MI400 Series GPUs in 2026. The MI400 Series will be built on the CDNA-Next architecture, the next step in CDNA’s development. One of its most notable changes is the move to HBM4 memory, with up to 432 GB per GPU. This is a major upgrade, allowing increasingly complex AI models with larger memory requirements to be processed. Memory bandwidth is expected to reach 19.6 TB/s, more than twice that of the MI355X. AMD expects the MI400 Series to deliver up to 10x higher inference performance on Mixture of Experts (MoE) models than the MI300X generation. Peak compute is expected to reach 40 PFLOPS at FP4 and 20 PFLOPS at FP8. The MI400 Series will be the heart of the “Helios” rack-scale AI system.
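The expected per-GPU MI400 figures can be scaled to a full 72-GPU “Helios” rack with simple multiplication. This is a naive, on-paper estimate under stated assumptions: it multiplies peak per-GPU figures by the GPU count and ignores interconnect overhead and the gap between peak and sustained performance. The `GpuSpec` class and `rack_totals` helper are hypothetical, and the MI355X bandwidth of 8 TB/s used for the ratio is AMD’s published spec rather than a figure from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Expected peak per-GPU figures (hypothetical container for illustration)."""
    name: str
    hbm_gb: float       # HBM capacity per GPU, GB
    hbm_bw_tbps: float  # HBM bandwidth per GPU, TB/s
    fp4_pflops: float   # peak FP4 throughput per GPU, PFLOPS
    fp8_pflops: float   # peak FP8 throughput per GPU, PFLOPS


# Figures quoted in this article for the MI400 Series (expected, 2026).
MI400 = GpuSpec("Instinct MI400", hbm_gb=432, hbm_bw_tbps=19.6,
                fp4_pflops=40, fp8_pflops=20)

# Assumption: AMD's published MI355X HBM3E bandwidth, not stated in this article.
MI355X_HBM_BW_TBPS = 8.0


def rack_totals(gpu: GpuSpec, count: int) -> dict[str, float]:
    """Naive rack totals: per-GPU peak figures times GPU count (decimal units)."""
    return {
        "hbm_tb": gpu.hbm_gb * count / 1000,            # GB -> TB
        "hbm_bw_pbps": gpu.hbm_bw_tbps * count / 1000,  # TB/s -> PB/s
        "fp4_eflops": gpu.fp4_pflops * count / 1000,    # PFLOPS -> EFLOPS
        "fp8_eflops": gpu.fp8_pflops * count / 1000,    # PFLOPS -> EFLOPS
    }


totals = rack_totals(MI400, count=72)
for key, value in totals.items():
    print(f"{key}: {value:.2f}")

bandwidth_ratio = MI400.hbm_bw_tbps / MI355X_HBM_BW_TBPS
print(f"MI400 vs MI355X memory bandwidth: {bandwidth_ratio:.2f}x")
```

Run as-is, this gives about 31.10 TB of HBM4, 1.41 PB/s of memory bandwidth, 2.88 EFLOPS at FP4 and 1.44 EFLOPS at FP8 per rack, plus a bandwidth ratio of about 2.45x over the MI355X. These are upper bounds on paper, not measured performance.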

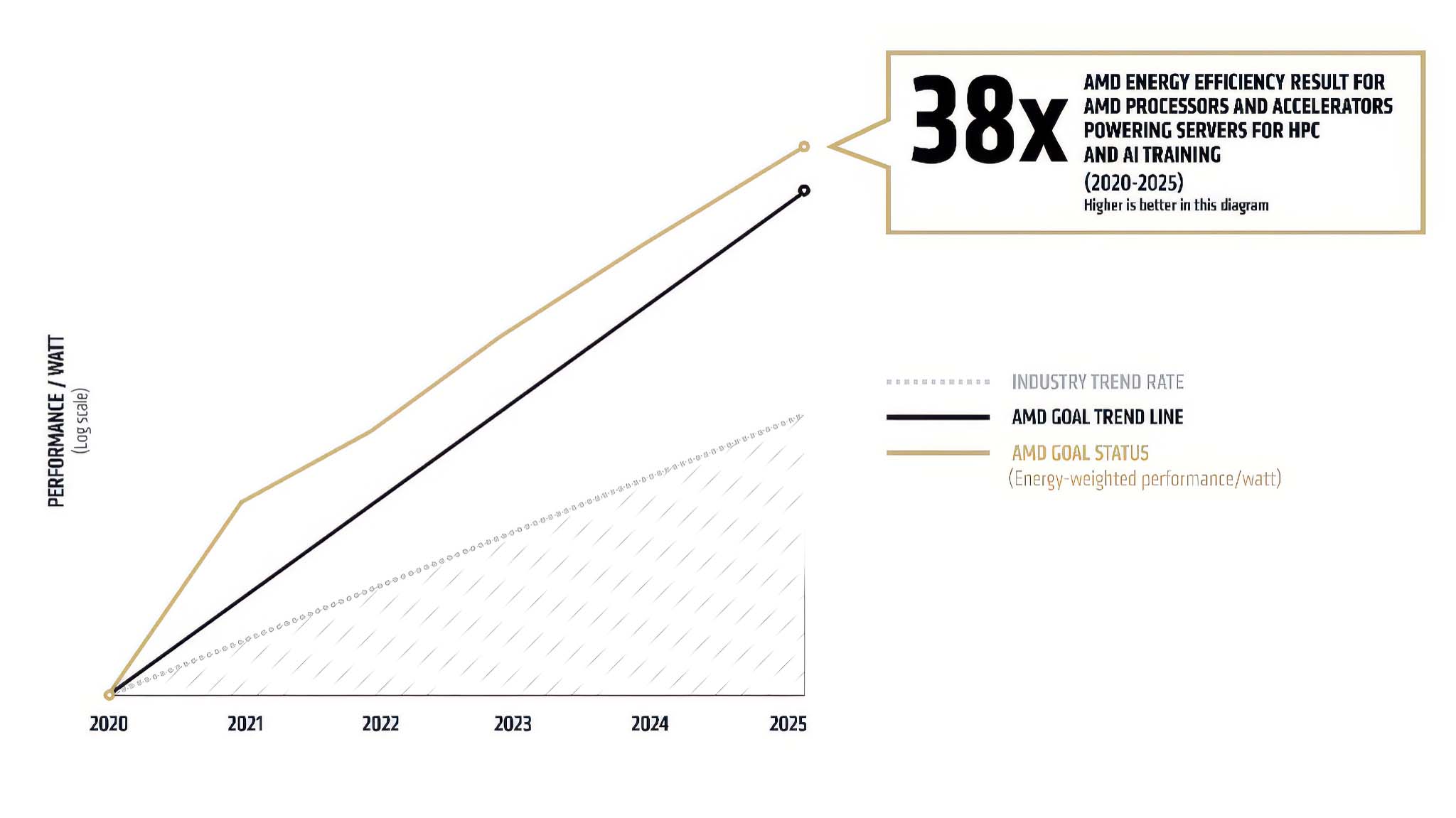

Commitment to energy efficiency

AMD is committed to improving the energy efficiency of AI solutions. The Instinct MI350 Series GPUs have surpassed AMD’s previous five-year goal of a 30x improvement in the energy efficiency of AI and HPC training nodes, achieving a 38x improvement over the 2020 baseline. AMD has also set an ambitious new goal for 2030: a 20x increase in rack-scale energy efficiency over a 2024 baseline. According to AMD, this would allow a typical AI model that today requires more than 275 racks to train to be trained in fewer than one fully utilized rack by 2030, using 95% less electricity.
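The 95% figure is consistent with the 20x goal if the training workload is held constant: a 20x gain in work per unit of energy means the same job needs 1/20 of the energy, i.e. 95% less. The sketch below checks that arithmetic, assuming constant work. The helper name `energy_reduction` is hypothetical, and the code does not model the separate consolidation from 275 racks to one, which presumably also depends on per-rack performance rather than efficiency alone.

```python
def energy_reduction(efficiency_gain: float) -> float:
    """Fraction of energy saved for the same amount of work (hypothetical helper).

    If work done per unit of energy rises by `efficiency_gain`, the same work
    needs 1 / efficiency_gain of the original energy.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 - 1.0 / efficiency_gain


# 2030 goal: 20x rack-scale efficiency over the 2024 baseline.
saving_2030 = energy_reduction(20)
print(f"20x efficiency -> {saving_2030:.0%} less energy for the same work")

# MI350 result versus the earlier 30x node-level goal (2020 baseline).
overshoot = 38 / 30
print(f"38x achieved vs 30x goal -> {overshoot:.2f}x the target")
```

The first line prints 95%, matching AMD’s stated saving; the second shows the MI350 result landing at about 1.27 times the earlier target.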

The energy efficiency goal addresses an increasingly pressing issue for the AI industry: the enormous operating costs and negative environmental impact of AI data centers’ high power consumption. Significantly improving energy efficiency will reduce the Total Cost of Ownership (TCO) for customers, make AI technology more accessible to more organizations and businesses, and address concerns about the sustainability of AI development. This could become an important competitive advantage for AMD, especially in regions with high electricity costs or increasingly stringent environmental regulations.

Open ecosystem strategy

Central to AMD’s strategy is building an “open AI ecosystem,” based on widely accepted industry standards and close collaboration with a diverse network of hardware and software partners. This includes providing hardware with open interfaces (such as support for the UALink connectivity standard for accelerators), developing a robust open-source software platform (ROCm), and designing rack-scale solutions that comply with open standards.

The advantages of this approach are numerous. First, it has the potential to attract a broader community of developers and researchers, who often prefer open tools and platforms for the freedom to customize and innovate. Second, it helps customers avoid vendor lock-in, giving them greater flexibility and bargaining power. Third, an open ecosystem often fosters faster innovation through knowledge sharing, code sharing, and collaboration among multiple parties. This is particularly attractive to companies that want to build and deeply customize their own AI solutions, rather than just using “boxed” products.

Impact on the AI Hardware Market

Increased competition from AMD is expected to have positive effects on the entire AI hardware market. One of the most obvious benefits is the potential for AI hardware to become more affordable, making the technology more accessible to businesses and end users. With more choice in vendors and solutions, customers will be better positioned to find the products that best fit their needs and budgets.

Competitive pressure from AMD will also push the entire AI industry to innovate faster. Companies will have to keep improving their products, optimizing performance, and reducing costs to stay competitive, which ultimately benefits end users. More cost- and energy-efficient AI solutions could also extend AI adoption to new industries and to developing countries where cost and infrastructure barriers have so far been high.

The role of strategic partners

In the effort to build an open and competitive AI ecosystem, the role of strategic partners is extremely important. The participation and support from AI industry giants such as Meta, OpenAI, Microsoft, Oracle and xAI at the “Advancing AI 2025” event shows the growing trust in AMD solutions.

In particular, Oracle Cloud Infrastructure (OCI) announced that it will offer zettascale AI clusters (capable of 10²¹ floating-point operations per second) with up to 131,072 AMD Instinct MI355X GPUs, a very positive signal for AMD. A collaboration at this scale not only brings AMD significant revenue, but also yields valuable feedback from running real AI workloads at extremely large scale. That feedback will help refine AMD’s hardware and optimize the ROCm software platform, while strengthening AMD’s reputation and position among enterprise AI customers and cloud service providers.
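To put “zettascale” in per-device terms, the sketch below divides 10²¹ FLOPS by the maximum announced cluster size. The result is the average peak throughput each GPU would need to contribute for a full-size cluster to reach one zettaFLOPS. The article does not say which numeric precision the zettascale figure assumes, so treat this as back-of-the-envelope arithmetic rather than a statement of MI355X performance.

```python
# 1 zettaFLOPS = 10**21 floating-point operations per second.
ZETTAFLOPS = 10**21
# Upper bound on cluster size announced by OCI.
MAX_GPUS = 131_072

# Average throughput each GPU must contribute for the full cluster to reach
# 1 zettaFLOPS (peak, not sustained; precision not stated in the article).
per_gpu_flops = ZETTAFLOPS / MAX_GPUS
print(f"{per_gpu_flops / 1e15:.2f} PFLOPS per GPU")

# The cluster size is a power of two: 2**17.
print(MAX_GPUS == 2**17)
```

This prints about 7.63 PFLOPS per GPU, followed by `True`, since 131,072 is exactly 2¹⁷.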